Manufacturers who want to stay competitive in the fast-evolving global market must learn how to pair humans’ judgment, creativity, and ingenuity with the strength, precision, and speed of robots. Increasingly, the industry is combining human flexibility with robot-powered processes, and the results are measurable.

Studies by MIT’s Julie Shah found that pairing humans with a robot reduces idle time by 85% compared with working in an all-human team. This suggests that manufacturing processes can become faster, more efficient, and more cost-effective when humans collaborate with robots.

But how fast is the cobot market growing?

According to a new report by technology intelligence firm ABI Research, the cobot market is expected to reach US$7.2 billion by 2030. This forecast suggests that more robots will be working alongside human workers.

As we expect more robots to work side-by-side with humans, we also expect human-robot teams to evolve with time. This article explores the future of human-robot teams.

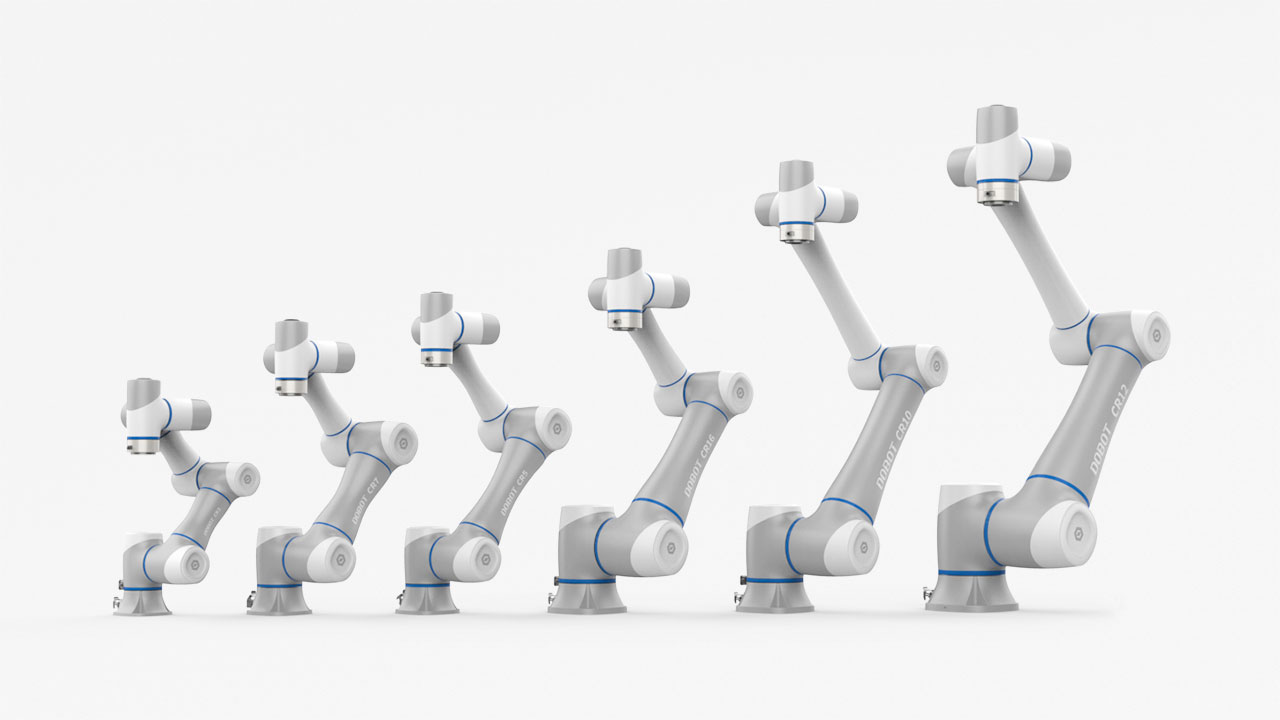

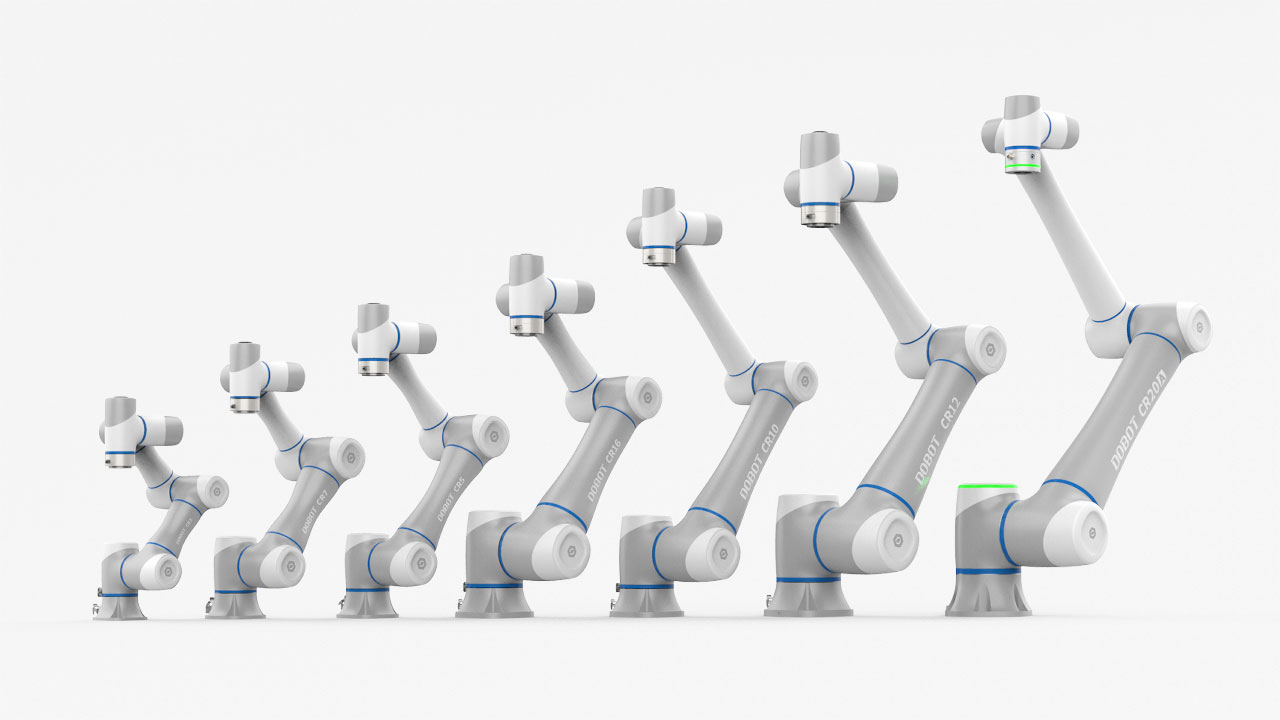

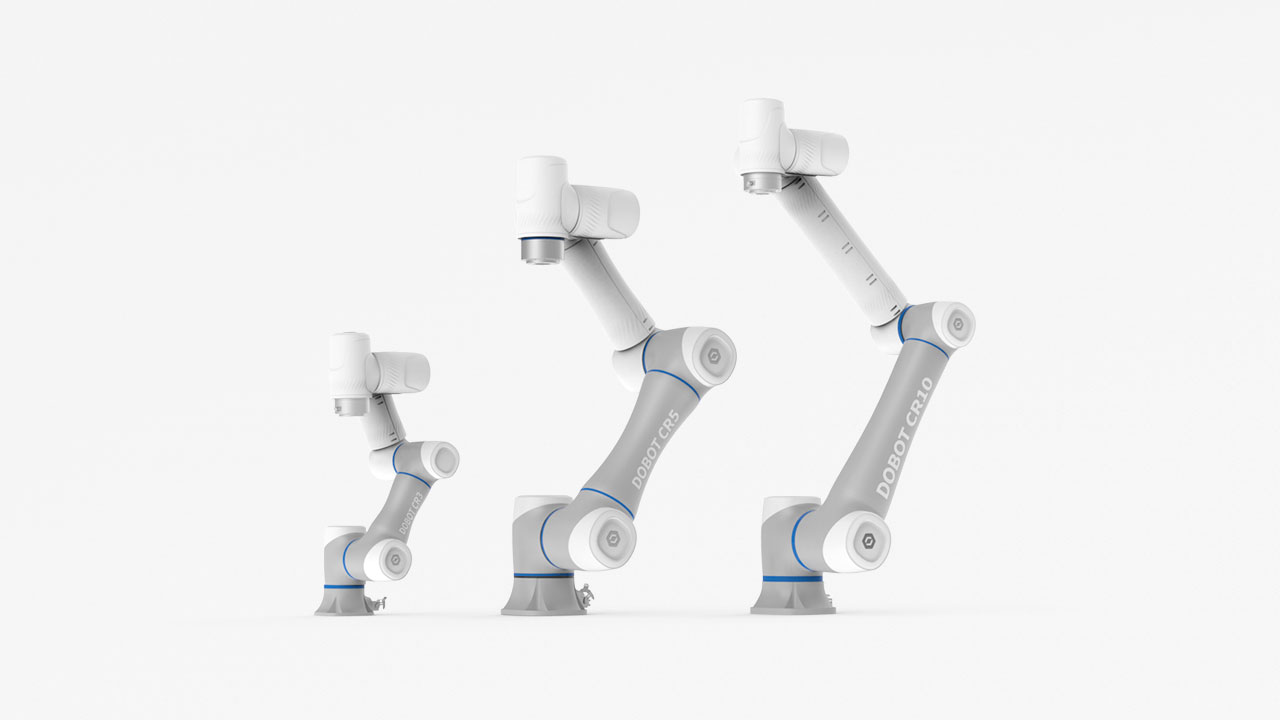

Manufacturers are increasingly turning to cobots to enhance efficiency and meet increased demand. Cobots balance adaptability, safety, and affordability, making them a strong choice for small-to-medium enterprises (SMEs) looking to embrace automation without overhauling operations.

Cobots can augment what human workers do and allow them to focus on safer, more creative, and less repetitive assignments without sacrificing efficiency.

With time, cobots will likely experience significant advancements. As technology evolves, collaborative robots in manufacturing will likely become more agile, more intelligent, and capable of performing more intricate tasks.

The integration of technologies such as AI and machine learning, for example, will enable real-time data analysis and remote monitoring.

There are many possibilities when looking at the future of human-robot teams. They include:

Increased Use of Artificial Intelligence and Machine Learning Technologies

These technologies are critical in helping robots for the manufacturing industry learn from human workers to improve efficiency and productivity in the manufacturing processes.

AI enhances the functionality of collaborative robots in manufacturing and makes them efficient, adaptable, and more user-friendly.

Machine learning, on the other hand, allows robots to learn from data instead of having to be programmed for every task. This means that manufacturing robots can adapt to changes in the production process and improve efficiency with less human intervention.

Robots for the manufacturing industry can use machine learning to optimize their movements and reduce the time taken to complete tasks. These industrial robots can also learn to recognize patterns and predict outcomes, which is an invaluable feature in quality control applications.

Improved Human-Robot Interaction

The interaction between cobots and human workers will be enhanced through machine learning and natural language processing (NLP).

NLP, for example, may allow collaborative robots in manufacturing to comprehend and respond to verbal commands, while machine learning may enable them to adapt to human behavior. Together, both technologies will help foster a more intuitive and collaborative work environment.

The result is that humans and robots may begin pursuing shared goals alongside one another.

Currently, the human-cobot relationship is somewhat linear, and the robot mainly repeats the same actions. With artificial intelligence, however, robots may be able to analyze real-time environmental data and adapt their behavior to their surroundings.

Examples include adjusting speed based on proximity to human workers or changing movement when a human moves. This will lead to efficient collaboration without the need for physical barriers.
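
As a rough illustration of proximity-based speed adjustment, the sketch below scales a robot's allowed speed according to how far away the nearest person is. The function name and all thresholds (`stop_distance`, `full_speed_distance`, `max_speed`) are hypothetical values chosen for the example; they do not come from any DOBOT product or safety standard, and a real system would rely on certified safety functions.

```python
def scaled_speed(distance_m: float,
                 max_speed: float = 1.0,
                 stop_distance: float = 0.2,
                 full_speed_distance: float = 1.5) -> float:
    """Return an allowed speed (m/s) given the distance to the nearest human (m).

    All default thresholds are illustrative assumptions.
    """
    if distance_m <= stop_distance:
        return 0.0  # person is too close: stop
    if distance_m >= full_speed_distance:
        return max_speed  # nobody nearby: run at full speed
    # Linear ramp between the stop zone and the full-speed zone.
    fraction = (distance_m - stop_distance) / (full_speed_distance - stop_distance)
    return max_speed * fraction


for d in (0.1, 0.85, 2.0):
    print(f"distance {d:.2f} m -> allowed speed {scaled_speed(d):.2f} m/s")
```

A linear ramp is only one possible design choice; it keeps the behavior predictable for the person working next to the robot.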

Manufacturing robots may also get better at understanding and interpreting human speech, gestures, and even emotions, paving the way for more intuitive interaction. This means that human workers may be able to issue commands directly, eliminating complex programming steps.

A customized user experience is also a possibility. AI may enable robots to offer a personalized experience by learning individual workers’ working styles and preferences. This customization could enhance user satisfaction and efficiency, as robots will be able to tailor their actions and responses to the needs of different workers.

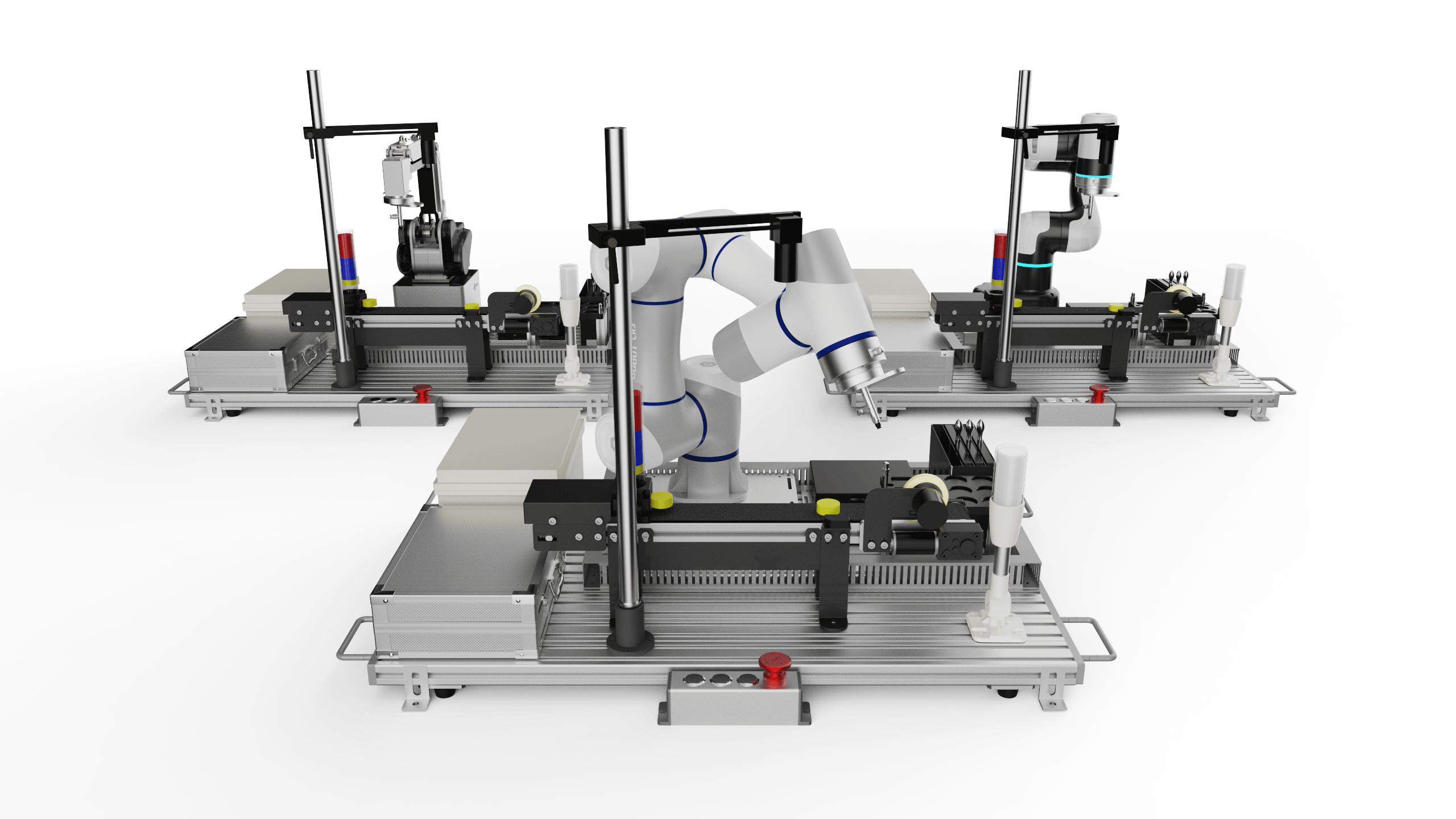

Autonomous Mobile Manipulation Robot (AMMR)

The autonomous mobile manipulation robot (AMMR) solution features proactive safety measures, precise navigation, and easy-to-use software that helps it perform transport, manipulation, and mobility tasks. It combines an autonomous mobile base with CR series collaborative robots, which makes it more flexible when production lines change.

It is easy to use: a graphical user interface handles map construction, and the system connects instantly to several grippers and 2D/3D vision modules.

The AMMR system supports vision-based automatic calibration to achieve ±0.5 mm accuracy, and its embedded laser SLAM algorithm achieves repeatability of up to ±5 mm. For better protection, it uses dual LiDAR sensors that scan 360 degrees and offers customizable safety zones to meet the needs of various application scenarios.

It can be used to address challenges such as labor shortages and the need to optimize workflows. In one example, it was used to improve palletizing efficiency and density.

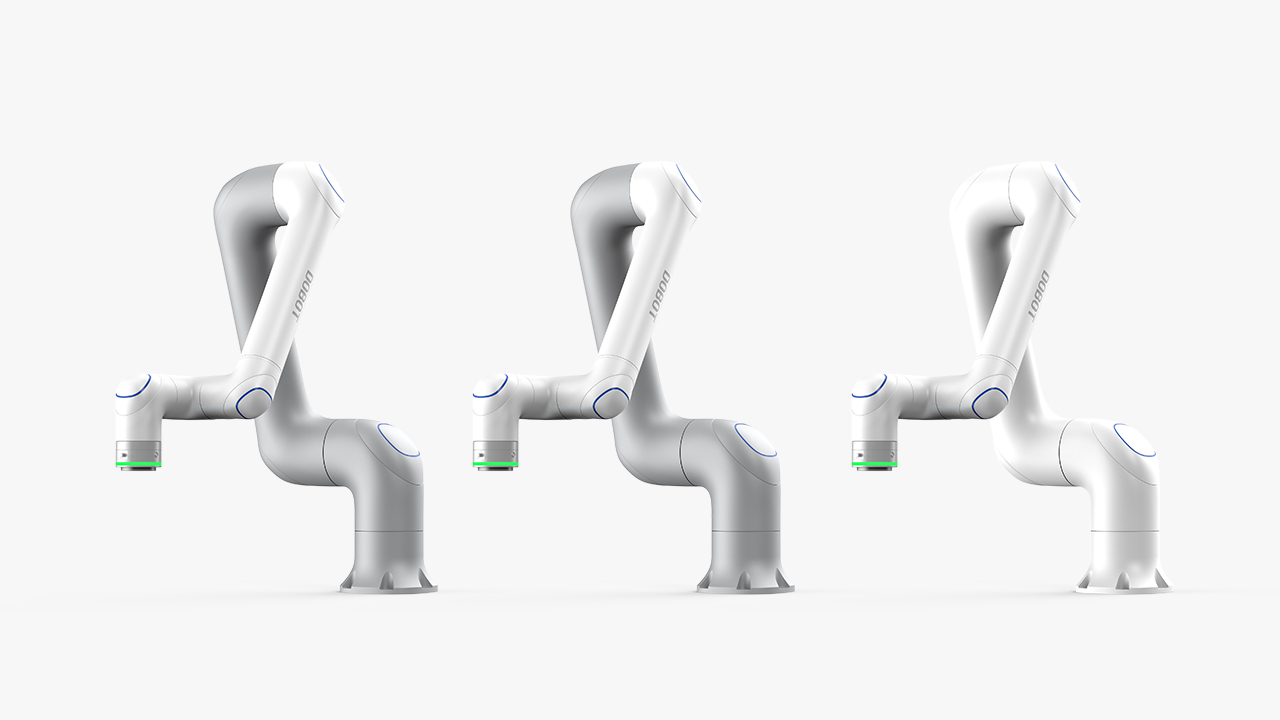

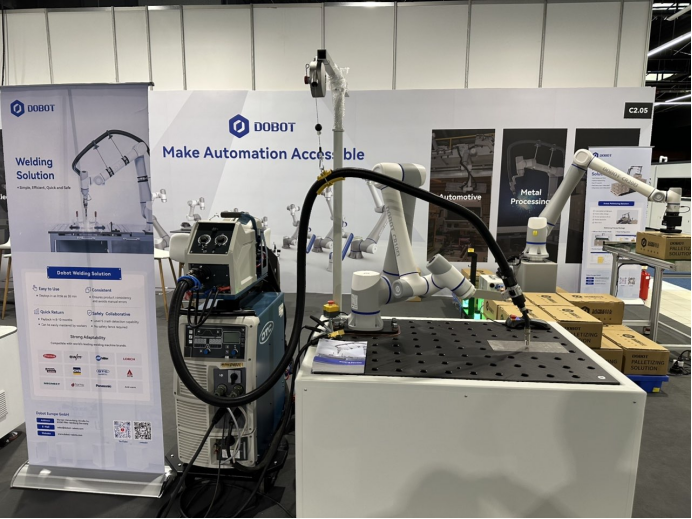

DOBOT CR10A (AWS re:Invent 2024)

The power of AI was also showcased during Amazon's AWS re:Invent 2024 when DOBOT partnered with the giant retailer for an interactive chess experience. This event merged advanced robotics with generative AI capabilities, allowing users to engage in dynamic chess matches against AI models powered by Amazon Bedrock.

The setup consisted of two robotic arms controlled by foundation models. AI detected moves on the smart chessboard and processed the arms’ precise movements. The arms in question were DOBOT CR10A cobots, which are equipped with precise motion control and computer vision, and they tracked and moved the chess pieces based on real-time decisions from Amazon’s AI.

The arms translated AI-driven strategies into accurate, fluid movements and adjusted accordingly as the game progressed. This showcased how DOBOT robots can perform complex tasks when paired with AI decision-making.

The event highlighted the possibility of integrating physical robots with generative AI in decision-making and strategic thinking.

Enhanced Safety

Collaborative robots in manufacturing are typically designed with advanced safety capabilities that enable them to perceive and react to their surroundings. One such feature is collision detection, which stops the robot when it comes into contact with a human worker and so helps prevent injury or damage.

Researchers from the KTH Royal Institute of Technology are working to develop context-aware robots that can predict a human’s upcoming posture and understand their current position. Their goal is to prevent workplace accidents by creating collision-free human-robot collaboration.

Other notable research comes from the University of British Columbia’s Okanagan campus, where researchers set out to create a “human-robotic dream team.” They are pioneering robots that can perceive their environments much as humans do, with a vision of a future in which humans and machines collaborate beyond the confines of factory settings.

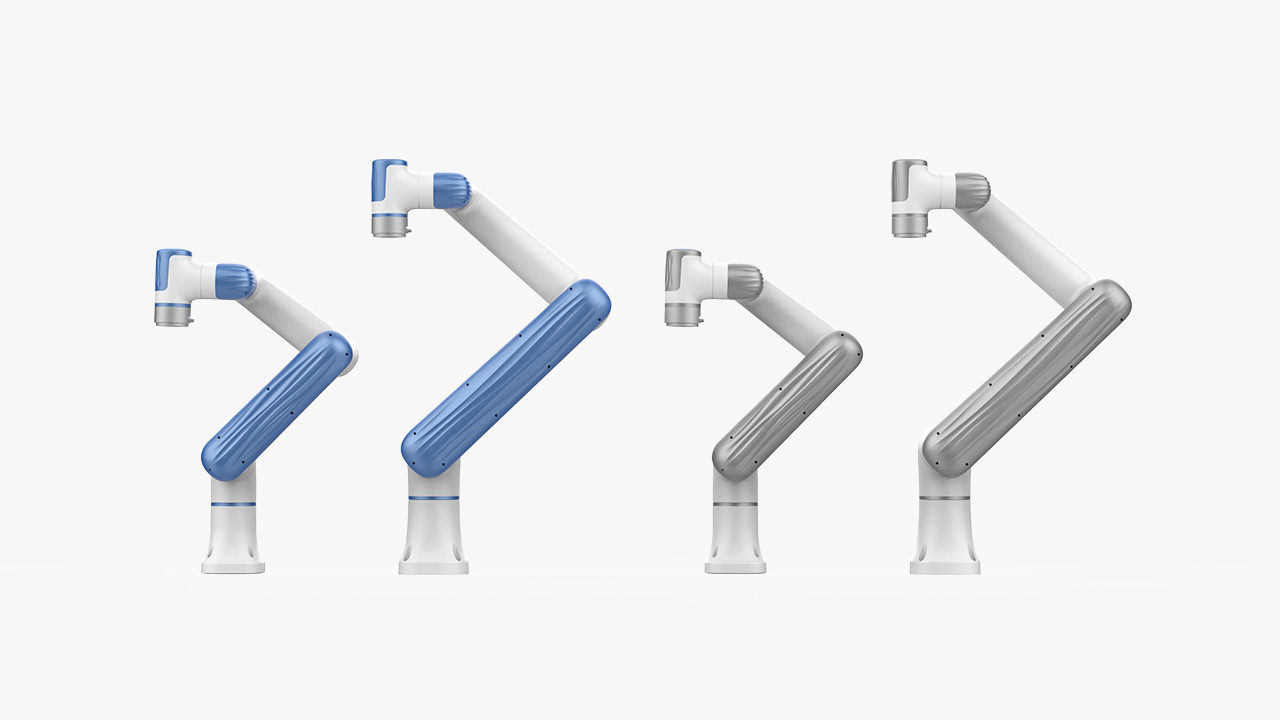

CR5S and SafeSkin

DOBOT collaborative robots use 2D and 3D camera vision for quality inspection and testing on electronics production lines. A robot like the CR5S can perform intelligent vision-guided sorting with advanced 3D vision AI. It can accurately identify, grasp, and sort a mix of mirrored and transparent workpieces, demonstrating its precision in complex tasks. The vision technology also detects objects and humans in the workspace, which helps prevent injuries.

DOBOT CR5S cobot performs in 3D Vision AI

DOBOT’s SafeSkin, available in robots like the CRA series, is a contactless pre-collision technology that redefines human-robot collaboration. It detects obstacles within 10 to 20 cm and responds in just 10 milliseconds, eliminating the need for preemptive slowdowns. This detection solution allows these industrial robots to operate at speeds of up to 1 m/s, boosting productivity 4x compared to traditional robots.

The technology delivers 360-degree protection with plug-and-play flexibility without requiring additional workspace.
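
To put the SafeSkin figures quoted above in perspective, the short calculation below estimates how far a robot moving at 1 m/s travels during a 10 ms response time, and how much of the shortest quoted detection range (10 cm) is left afterward. This is back-of-the-envelope arithmetic only: it assumes constant speed during the response window and ignores braking distance, which the source does not specify. The function name is hypothetical.

```python
def travel_during_reaction(speed_m_s: float, reaction_time_s: float) -> float:
    """Distance (m) covered at constant speed before the robot starts to react."""
    return speed_m_s * reaction_time_s


speed = 1.0               # m/s, top speed quoted in the article
reaction = 0.010          # s, 10 ms response time quoted in the article
detection_range = 0.10    # m, lower end of the quoted 10 to 20 cm range

travel = travel_during_reaction(speed, reaction)
margin = detection_range - travel  # what remains for braking (assumption: not modeled here)

print(f"travel during reaction: {travel * 100:.1f} cm")
print(f"remaining margin:       {margin * 100:.1f} cm")
```

Under these assumptions the robot covers about 1 cm before it begins to respond, leaving roughly 9 cm of the detection range for deceleration.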

While this technology is already capable, we may see additional safety features as manufacturing robots continue to interact with humans. One example is an AI-powered vision system that can recognize the presence of a human and adjust movements accordingly. Machine learning models may also detect unsafe conditions and initiate preventative actions to protect humans.

In the future, AI-powered safety systems may immediately notify workers and management of any pending risks and shut the robot down.

Smarter Decision-Making and Adaptability

AI may improve the cobot’s capability to process data from several sensors, allowing it to accurately understand its surroundings, detect changes, and recognize objects. This enhanced sensory perception will enable better object manipulation and interaction. It will also allow cobots to work closer to human workers without compromising safety.

Continuously collecting and processing data means collaborative robots in manufacturing will get better over time, and as a result, their flexibility and adaptability will increase. As the cobot’s adaptive capabilities improve, object recognition will become more accurate.

Advanced image recognition and sensory technologies, combined with AI, will allow objects to be detected and identified more accurately. As a result, cobots may increasingly be used in tasks requiring high precision, such as assembling small components.

56 Magician Robots: 2019 Shenzhen Spring Festival Gala

DOBOT robots’ performance at the 2019 Shenzhen Spring Festival Gala was a testament to what can be achieved with sensory intelligence. 56 Magician robots, controlled together via an external I/O interface, were programmed to match the melody and to operate offline. Once the instructions were delivered, the robots swung in unison while waving the glow sticks and dancing to the rhythm.

56 robotic arms are waving the glow sticks in their hands:

Besides dancing, DOBOT robots can see, feel, and listen, and can make use of infrared and GPS technologies, as long as they are equipped with the right sensors.

The Need for Cloud-Connectivity to Enhance Data Monitoring and Analytics

Integration with cloud technologies is likely to make cobots even more intelligent than they already are. Cloud connectivity will allow real-time data monitoring, predictive maintenance, remote troubleshooting, performance analysis, workflow optimization, and reduced operational risk. It will also enable seamless integration with other innovative technologies.

Backing up your log files and other diagnostic or calibration files means you can access them whenever needed, for example when you need to troubleshoot the robot. It also makes it easier to get support, since you can share the files online and avoid time-consuming onsite visits.

Backed-up data opens up the possibility of predictive maintenance and optimization using AI-based self-diagnosis. The robot may be able to monitor its own performance, anticipate maintenance needs, and even predict when new parts are needed.
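
As a minimal sketch of what data-driven self-diagnosis could look like, the example below compares recent readings of a logged metric against a healthy baseline and flags the robot for inspection when the recent average drifts too far. The metric (a joint's motor current), the sample values, and the threshold are all hypothetical; this is a simple statistical check for illustration, not DOBOT's method or a substitute for a trained model.

```python
from statistics import mean, stdev


def needs_inspection(baseline: list[float],
                     recent: list[float],
                     z_threshold: float = 3.0) -> bool:
    """Flag drift when the recent mean is more than z_threshold baseline
    standard deviations away from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # Perfectly flat baseline: any change at all counts as drift.
        return mean(recent) != mu
    return abs(mean(recent) - mu) / sigma > z_threshold


# Hypothetical motor-current samples (amps) pulled from backed-up logs.
healthy_baseline = [2.0, 2.1, 1.9, 2.0, 2.1, 1.9]
normal_week = [2.0, 2.05, 1.95]
worn_joint_week = [2.6, 2.7, 2.8]

print(needs_inspection(healthy_baseline, normal_week))      # within normal variation
print(needs_inspection(healthy_baseline, worn_joint_week))  # large drift from baseline
```

Keeping the logs in the cloud is what makes this kind of comparison possible over weeks or months, since the baseline and recent samples can be pulled from the same history.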

Having data in the cloud can also enable integration with other enterprise systems, such as manufacturing execution systems (MES) or enterprise resource planning (ERP). This translates to seamless data exchange and coordination across different production processes and promises more efficiency.

There are many possibilities for future human-robot teams, especially as technology advances.

The integration of robots with AI and machine learning, for example, represents a significant step forward in automation. As these technologies evolve, we can expect even more efficiency and effectiveness in human-robot teams.